This entry is part of a series of #moznewslab posts that I’ll publish over the course of my time as a participant in the Knight-Mozilla learning lab. On the merits of a video idea “that will improve the way that online news is produced or experienced” I was invited to the second round of the Knight-Mozilla Fellowship. I’ll be using posts such as this to reflect on and share what I’ve learned in class and to develop my final open-source project, which I hope to be invited to prototype in the next round. Your feedback, positive and negative, is encouraged and very welcome. Seriously!

The last ten years have brought a lot of change to newsrooms, and for people like me it has meant a golden age of creating content in new forms. I started as a writer, became a photojournalist who tinkered with adding ambient sound and interviews to slideshows, jumped on SoundSlides with gusto years later and then followed the natural progression to video.

For the last half dozen or so years I’ve been producing multimedia stories for newspapers and combining my passion for documentary journalism with cinema and storytelling to produce some personally satisfying works.

Unfortunately, these video pieces have a very short life span in most newsrooms I’ve worked in.

Even if editors do everything right and the video ends up with multiple entry points (the photo/multimedia page, the Twitter feed and the front page) and is linked to the written story everywhere it appears, news video is still hard to find and view once it falls off the front page or becomes a day-two video.

If it’s a video not associated with print content or a feature, then forget about it being seen unless you’re sending out the URL yourself to drive eyes to the important piece you just spent the last two weeks producing.

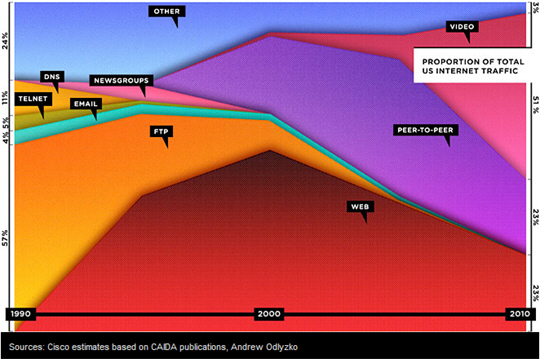

It’s the sad reality, and as viewership fails to catch on and bring in the important analytics, it’s getting harder for some to justify the investment of time and money in video to the “higher ups” who want to monetize it. That sounds counterintuitive, you might say: video is the future of the web, and that’s pretty much a given. As Wired illustrated in the accompanying graph, the popularity of video is growing and it accounts for nearly half of all web traffic.

It’s only going to grow further as mobile video comes into its own and set-top boxes like Apple TV, Google TV, Roku, TiVo and others start to embrace IPTV. Yet I’ve seen it firsthand in newsrooms: a memo comes down to stop producing video and to lay off video producers because the higher-ups don’t see it as a wise investment.

The core issue here is that video isn’t generating revenue because it’s not being seen, and it’s not being seen because current text-centric newspaper websites are designed in a way that doesn’t make finding video to watch easy. It’s a classic chicken-and-egg scenario.

Solving the problem of making news video easier to discover and view for the people who care to see it is actually pretty simple at the base level. We need to borrow the workflow and principles of the newsroom photo department, which have served us so well on the web already.

This is where VidScribe (name pending) comes in, my idea for the #MozNewsLabs, which earned me an invite to round two. It’s workflow software that gives video IPTC-like embedded data and automated transcription, with a layer of crowd-sourced gamification, to make video search friendly and social friendly and to give it a long tail.

The nitty gritty

There needs to be a method of crawling videos so that search engines can find them and provide them a long tail, or at this point, any tail.

Take, for instance, the news of Osama bin Laden’s capture and death. When I wanted to see news reports, President Obama’s address, sidebar videos and reactions to the news, my first inclination, like that of the majority of Internet users, was to search YouTube.

YouTube has a tagging and search system that produces quick results for minimal effort, and with a user base in the tens of millions, there’s a good likelihood that I’ll find what I’m looking for.

YouTube is better than a newspaper website, a TV station website or any search engine at finding the news I want to watch. It’s even better than the kingpin Google’s own Google Video search. Bing’s video search has made some strides, and I personally find it to be the most accurate video search among the search engines, but again, news videos from newspapers are seldom anywhere to be seen.

Right now video search online via Google, Yahoo or Bing (which is slightly better than its rivals, but not by much) produces very poor results if you’re looking for video published on a newspaper’s website.

Newspaper websites run into this problem for three reasons.

- First, virtually all news video today is an embedded FLV file, which carries very little metadata, if the creator remembered to put any in at all. As with Flash, web crawlers can’t see what is in the video, only what is around it, such as a title and summary paragraph. HTML5 video will change some of this with more robust SEO, but it still won’t be a good solution, because search engines still won’t be able to scrub the content of the video.

- Second, newspapers chronically forget to include the video on the same page as the story, or they only link to a video page, which means that even if I search for a relevant written article I still may not see its corresponding video. So as the news cycle progresses, usually in under a week, videos are lost to the void, and no combination of search engines on the web or the newspaper’s own search function will ever find them again.

- Last, search tools on newspaper websites, save for a select few, rarely return any link to video. If the algorithm that crawls a news organization’s own website can’t find any video, the probability that a second or third party search engine will find it on the web at large won’t be much better.

Virtually all newspapers produce some sort of video and then forget about the last mile. They keep their investment from paying off by not making the video easy to find, not monetizing it and sometimes just forgetting to include it on the page with the story.

This is unacceptable.

As a journalist who has produced news video, I can say nothing stings harder than putting in hours of labor on deadline only to see the analytics show that no one is watching because viewers don’t know the video is there. Within every video shooter’s circle of friends there are horror stories of pieces that editors forgot to link to, promote or embed.

Long story short, videos need to be searchable. So how do we do that? Including story summaries is a good start, but videos also need to be meta-tagged and categorized just like print copy and photography. Every word that is said in a video needs to be searchable, too.

Two birds, one stone

This can be accomplished by transcribing the video, but why not kill two birds with one stone? Why not transcribe and then use that script for closed captioning? Web spiders get a way to crawl the content in the video, and viewers are provided a service.

A service which may bring in additional viewership and allow for others to watch in places where playing the audio isn’t an option. (Like, ahem, at work when you’re already watching my slideshows.)
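To make the one-transcript, two-uses idea concrete, here is a minimal sketch in Python. It assumes the transcript already exists as timed segments, and it picks WebVTT as the caption format purely for illustration; the `Segment` shape and the function names are hypothetical, not part of any existing VidScribe code.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One spoken passage with start and end times in seconds (hypothetical shape)."""
    start: float
    end: float
    text: str


def format_timestamp(seconds: float) -> str:
    """Format seconds as the HH:MM:SS.mmm timestamp used in WebVTT cues."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def to_webvtt(segments: list[Segment]) -> str:
    """Build a caption file: a WEBVTT header followed by one cue per segment."""
    lines = ["WEBVTT", ""]
    for seg in segments:
        lines.append(f"{format_timestamp(seg.start)} --> {format_timestamp(seg.end)}")
        lines.append(seg.text)
        lines.append("")
    return "\n".join(lines)


def to_search_text(segments: list[Segment]) -> str:
    """Join the same segments into plain text that a crawler can index."""
    return " ".join(seg.text for seg in segments)
```

The same list of segments feeds both outputs, so a fix to the transcript fixes the captions and the searchable text at once.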

However, transcription can be time consuming and expensive. Granted, 99% of today’s newspaper videos are in the two-minute range and a visual journalist can surely type up a transcript within a reasonable time, but what happens when newspapers finally realize the potential of long-form video for online, tablets and services like Google TV? So, let’s future-proof and simplify the process from the get-go.

The solution to this problem is part workflow and part creation of new software.

First, videos need to come with embedded data forms the same way pictures have IPTC data, and everything in that data set needs to be searchable by Google. Why Google? They’re the 800-pound gorilla in the room, that’s why.

An asset management program will allow IPTC-style data to be added to video via fill-in forms, which will have many of the same fields as pictures: data sets for author, geo-location, caption/summary and copyright permissions. One of those fill-in boxes will provide a place to paste in a transcript for the video.
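As a rough illustration of what such a fill-in form could produce, here is a small Python sketch of a metadata record saved as JSON alongside the video. The field names are my own guesses modeled on the boxes described above, not an official IPTC schema, and the JSON sidecar is just one assumed way to store it.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class VideoMetadata:
    # Field names are hypothetical; they mirror the fill-in boxes described above.
    title: str
    author: str
    caption: str
    copyright_notice: str
    latitude: float
    longitude: float
    keywords: list[str]
    transcript: str = ""  # the box where the transcript gets pasted


def to_sidecar_json(meta: VideoMetadata) -> str:
    """Serialize the record so it can be stored or published alongside the video file."""
    return json.dumps(asdict(meta), indent=2, sort_keys=True)
```

Because everything lives in plain text fields, the same record could feed the page markup, the site’s own search and the archive.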

Automatic transcription software will be employed to extract every word spoken into a suitable format. Now, if you’ve used Google Voice, you know it’s not always accurate, so that’s where humans come into play.

First, when reviewing its automated pass, the software will highlight problem areas so a producer or visual journalist can verify that it got them right. For anyone who has used spell check, the process will be familiar, quick, painless and intuitive.

To improve accuracy, the software will also have options to create profiles for different reporters, much in the same way visual journalists create profiles and actions for Photoshop and Aperture. For example, say Bill is always doing voice-overs in his videos. Once a profile is created for Bill, the software will learn his speech patterns and get more accurate over time.
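Here is a minimal sketch of that spell-check-style review step in Python. It assumes the transcription engine hands back a confidence score between 0 and 1 for each word; that input shape, the 0.8 cutoff and the `[[ ]]` markers are all assumptions for illustration.

```python
def flag_uncertain_words(words, threshold=0.8):
    """Return the transcript with low-confidence words wrapped in [[ ]] for review.

    `words` is a list of (word, confidence) pairs; both the input shape and
    the 0.8 threshold are assumptions for illustration.
    """
    marked = []
    for word, confidence in words:
        marked.append(f"[[{word}]]" if confidence < threshold else word)
    return " ".join(marked)


def words_needing_review(words, threshold=0.8):
    """List the positions a producer should check, like a spell checker's queue."""
    return [i for i, (_, confidence) in enumerate(words) if confidence < threshold]
```

A producer would only need to look at the flagged positions rather than proofread the whole transcript.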

Once an editor and copy editor have given the thumbs up to the video, it will be posted and then the last line of defense is employed.

Since the transcript is also used for closed captioning, a crowd-sourced component can be implemented for viewers to submit corrections and report typos, spelling and grammar errors with the click of a button.

One of my favorite projects to come out of the Knight News Challenge was Media Bugs. In essence, this would be a video version of that open-source project.

These user submissions will be channeled to the appropriate editor, the correction can be made and the viewer who submitted the correction will be awarded points on their website profile and eventually a status upgrade or badge to encourage participation through gamification. If such a profile system does not exist at the newspaper website, the system allows for a thank-you e-mail to be sent to the viewer.
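The correction loop could be sketched like this in Python. Every name here is hypothetical, and the 10-point reward is an arbitrary placeholder; the point is only to show the order of events: a viewer submits, an editor reviews, and only accepted fixes change the caption and earn points.

```python
from dataclasses import dataclass, field


@dataclass
class Correction:
    viewer: str
    cue_index: int        # which caption line the viewer is correcting
    suggested_text: str
    status: str = "pending"


@dataclass
class CaptionedVideo:
    captions: list[str]
    queue: list[Correction] = field(default_factory=list)
    points: dict[str, int] = field(default_factory=dict)

    def submit(self, viewer: str, cue_index: int, suggested_text: str) -> Correction:
        """A viewer flags a caption; nothing changes until an editor reviews it."""
        correction = Correction(viewer, cue_index, suggested_text)
        self.queue.append(correction)
        return correction

    def review(self, correction: Correction, approve: bool, reward: int = 10) -> None:
        """An editor accepts or rejects; accepted fixes update the caption and award points."""
        if correction.status != "pending":
            return  # already handled, so points are never awarded twice
        if approve:
            self.captions[correction.cue_index] = correction.suggested_text
            self.points[correction.viewer] = self.points.get(correction.viewer, 0) + reward
            correction.status = "accepted"
        else:
            correction.status = "rejected"
```

On a site without viewer profiles, the points dictionary could be swapped for a step that queues a thank-you e-mail.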

With this approach, newspaper video will start showing up in searches on Google, more traffic is brought to the video department, videos suddenly have a long tail, user engagement improves and the video archive becomes a valuable asset with copyrights and licensing contacts at the ready for historians, filmmakers and digital magazine producers.

This is not even considering the added value that a well-organized and robust video offering can provide in future projects and through technological changes. If next year video tablets become the norm, or TV on demand becomes number one, or every newspaper creates a virtual TV channel on the iPhone, it’s going to be very easy and valuable to be able to adapt on the fly.

For example, if an event like the bin Laden news, last week’s attack in Norway or the political spectacle of debt ceilings breaks at the eleventh hour, it’ll be very quick and easy to organize a video timeline of the rise and fall of the Al-Qaeda leader, Norway’s public security or the voting history of members of Congress, work with a designer and data journalist to layer it over an interactive layout and publish it out to the web, mobile, tablets, social media, iOS apps, Google TV and a Hulu channel, all on deadline.

Collaborators welcome

In the spirit of open source and the notion that with many hands working together on the simple problems we’ll solve the big dilemmas, I now turn to you in the community with a call to action.

I’m a media experimenter with a lot of newsroom knowledge and a pretty solid sense for how the web works, finding the right tools or plug-in to get the job done and how code is structured, but I fall short of the mad skillz necessary to write my own code.

If this project excites you, you have an idea to improve it, you think it’s stupid or you just want a fundamental challenge in changing the way we think about news video on the web, leave a comment or get in touch directly and let’s connect.

@sdulai

Shaminder.Dulai <at> gmail.com

Later!
